Prompt Evaluation

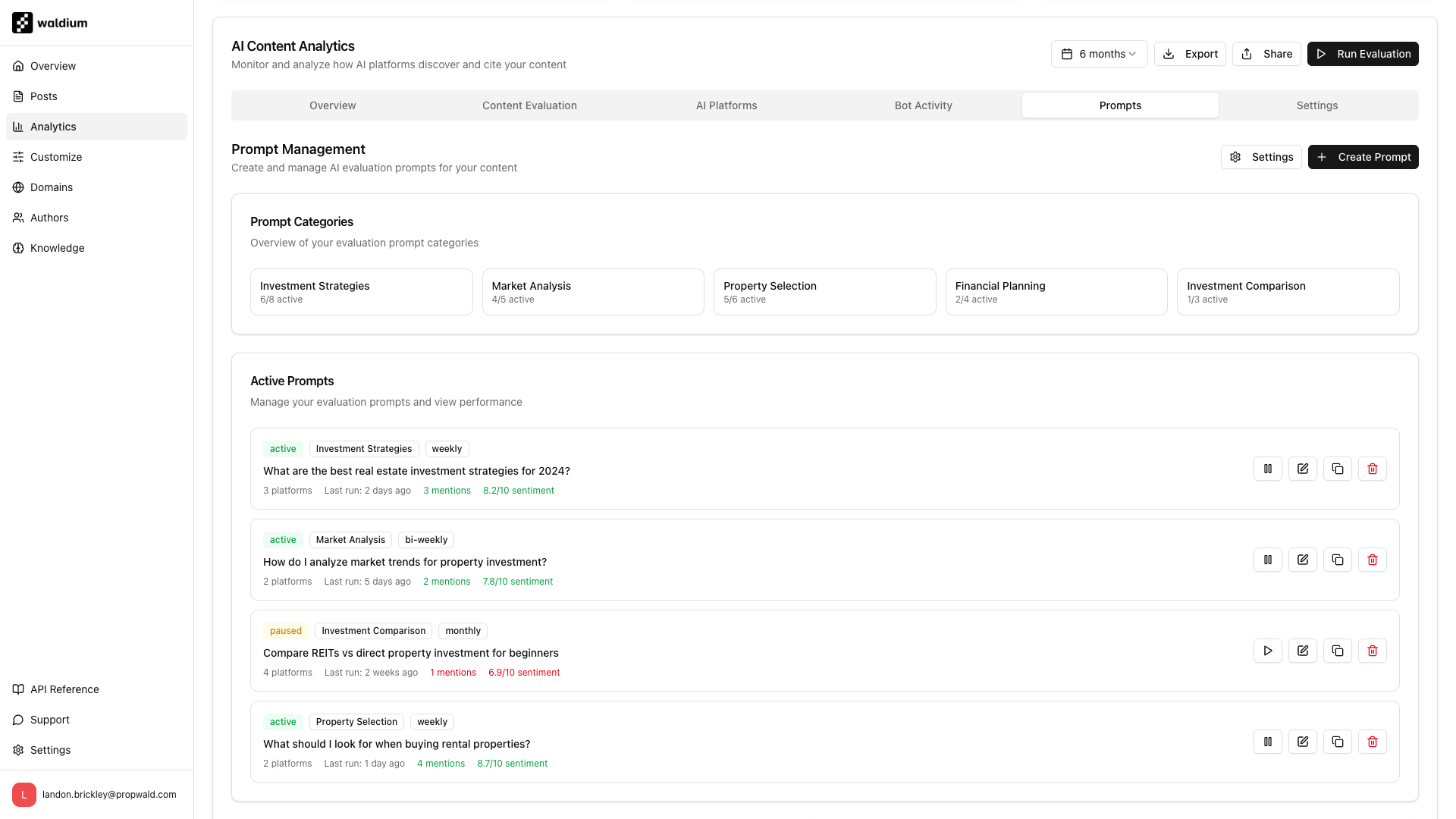

The only way to know if your content is working is to test it. Create a prompt bank to systematically evaluate how often AI systems cite your content across different topics and platforms.

Waldium's analytics dashboard automatically tracks AI citations, but you can also manually test specific prompts to evaluate content performance.

Prompt evaluation is the process of testing how AI systems respond to questions about your industry and measuring whether they cite your content. It's the most direct way to measure your AI visibility.

What you're measuring:

- Citation rate - How often AI systems mention your content

- Brand mentions - Direct references to your company or products

- Response quality - How well AI systems represent your expertise

- Platform performance - Which AI systems cite you most often

Creating Your Prompt Bank

Build a library of test prompts for your core topics based on your content areas and target audience questions. Examples:

- "What are the best tenant screening practices for rental properties?"

- "How do I calculate ROI on rental property investments?"

- "What should I look for when buying rental properties?"

- "How do I handle tenant disputes and evictions?"

- "What are the tax implications of rental property ownership?"

Organize by Categories

Structure your prompts to test different aspects of your expertise:

- How-to guides - "How do I screen tenants for rental properties?"

- Best practices - "What are the best property management software options?"

- Comparisons - "REITs vs direct property investment: which is better?"

- Problem-solving - "How do I handle difficult tenants who don't pay rent?"

- Industry trends - "What are the latest real estate investment trends for 2024?"
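A categorized prompt bank can live in a spreadsheet, but it can also be kept as a small data structure. The following is a minimal sketch, assuming a hypothetical `Prompt` record and category labels chosen for illustration; neither is part of Waldium.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    """One test question (hypothetical structure, for illustration only)."""
    category: str  # e.g. "how-to", "comparison"
    text: str      # the exact question to ask each AI system


PROMPT_BANK = [
    Prompt("how-to", "How do I screen tenants for rental properties?"),
    Prompt("best-practices", "What are the best property management software options?"),
    Prompt("comparison", "REITs vs direct property investment: which is better?"),
    Prompt("problem-solving", "How do I handle difficult tenants who don't pay rent?"),
    Prompt("industry-trends", "What are the latest real estate investment trends for 2024?"),
]


def group_by_category(prompts):
    """Return a dict mapping each category to its list of prompt texts."""
    grouped = defaultdict(list)
    for prompt in prompts:
        grouped[prompt.category].append(prompt.text)
    return dict(grouped)
```

Grouping by category makes it easy to see which areas of expertise have few or no test prompts yet.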

Test Different Question Formats

AI systems respond differently to different question types. Question formats to test:

- Direct questions - "What is the best way to screen tenants?"

- Comparison questions - "Property management software: which is best?"

- Problem-solving - "How do I handle tenant disputes?"

- Trend questions - "What are the latest property investment trends?"

- Beginner questions - "How do I start investing in rental properties?"

Testing Your Prompts

Test each prompt across multiple AI platforms to get comprehensive data:

Testing workflow:

- Ask the question - Use the same exact prompt wording in each AI system

- Record the response - Copy the full AI response

- Check for citations - Look for mentions of your content or brand

- Rate the quality - How well does the response represent your expertise?

- Track results - Log findings in your evaluation spreadsheet
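If you prefer a file to a hand-edited spreadsheet, the workflow above can be logged as CSV rows. This is a rough sketch only: the `PromptResult` fields, the 1-5 quality scale, and the `append_results` helper are assumptions made for illustration, not a Waldium format.

```python
import csv
import os
from dataclasses import asdict, dataclass, fields


@dataclass
class PromptResult:
    """One prompt tested on one platform (hypothetical columns)."""
    tested_on: str  # ISO date, e.g. "2024-05-01"
    platform: str   # e.g. "ChatGPT"
    prompt: str     # exact prompt text used
    response: str   # full AI response, copied as-is
    cited: bool     # did the response mention your content or brand?
    quality: int    # your own rating; 1-5 is an assumed scale


def append_results(path, results):
    """Append results to a CSV file, writing the header only for a new file."""
    columns = [field.name for field in fields(PromptResult)]
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=columns)
        if is_new:
            writer.writeheader()
        for result in results:
            writer.writerow(asdict(result))
```

Appending rather than overwriting keeps a history of every test run, which is what later trend analysis depends on.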

AI Platforms to Test

- ChatGPT - Widely used, good for general questions

- Claude - Strong for detailed analysis and comparisons

- Google AI Overviews - Appear directly in Google search results

- Perplexity - Shows source citations alongside its answers

Testing Frequency

- Weekly - Test your top 10 prompts

- Monthly - Full evaluation of all prompts

- After publishing - Test the prompts related to new content within 48 hours

What to Look For

When evaluating AI responses, focus on these key metrics:

Citation indicators:

- Direct mentions - Your company name or brand

- Content references - Links to your blog posts or guides

- Expertise recognition - AI acknowledging your authority

- Competitive mentions - How you compare to competitors
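Direct mentions and content references can be pre-screened with a simple text check before you read each response by hand. The sketch below assumes you supply your own brand names and domains; `find_citation_indicators`, "Acme Rentals", and `acmerentals.example` are hypothetical. It cannot judge expertise recognition or competitive framing, which still need a human read.

```python
import re


def find_citation_indicators(response, brand_names, domains):
    """Report which brand names and domains appear in an AI response."""
    # Whole-word, case-insensitive match for each brand name.
    brand_hits = [
        name for name in brand_names
        if re.search(rf"\b{re.escape(name)}\b", response, flags=re.IGNORECASE)
    ]
    # Plain substring match for domains, since they usually appear inside URLs.
    lowered = response.lower()
    domain_hits = [domain for domain in domains if domain.lower() in lowered]
    return {
        "brands": brand_hits,
        "domains": domain_hits,
        "cited": bool(brand_hits or domain_hits),
    }


example = find_citation_indicators(
    "According to Acme Rentals (https://acmerentals.example/guide), screen early.",
    brand_names=["Acme Rentals"],
    domains=["acmerentals.example"],
)
```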

Quality signals:

- Accuracy - Does the response reflect your actual expertise?

- Completeness - Does it cover the topic thoroughly?

- Helpfulness - Would users find this response valuable?

Measuring Performance

Citation Rate Calculation

Track how often your content gets cited across all your test prompts:

Citation rate formula:

Citation Rate = (Tests with Citations / Total Tests) × 100

Here one test is one prompt asked on one platform.

Example:

- Test 20 prompts across 4 platforms = 80 total tests

- Get citations in 12 of those tests = 12 / 80 = 15% citation rate
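The same arithmetic can be written as a small helper. This is a sketch under the assumption that each prompt run on each platform counts as one test; the function name `citation_rate` is illustrative.

```python
def citation_rate(tests_with_citations, total_tests):
    """Return the citation rate as a percentage of all tests run."""
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    if not 0 <= tests_with_citations <= total_tests:
        raise ValueError("tests_with_citations must be between 0 and total_tests")
    return 100 * tests_with_citations / total_tests


# 20 prompts on 4 platforms is 80 tests; 12 of them contained a citation.
print(citation_rate(12, 20 * 4))  # 15.0
```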

Platform Performance

Compare how different AI systems perform for your content:

Platform metrics (illustrative figures, separate from the example above):

- ChatGPT - 8/20 prompts cited (40% rate)

- Claude - 6/20 prompts cited (30% rate)

- Google AI Overviews - 4/20 prompts cited (20% rate)

- Perplexity - 10/20 prompts cited (50% rate)
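A per-platform breakdown like this can be derived from raw test records. The sketch below assumes each record is a `(platform, cited)` pair, which is a hypothetical shape chosen for illustration, and rebuilds the figures listed above.

```python
from collections import Counter


def platform_rates(results):
    """Map each platform to its citation rate in percent.

    `results` is an iterable of (platform, cited) pairs, one per test.
    """
    tested = Counter()
    cited = Counter()
    for platform, was_cited in results:
        tested[platform] += 1
        if was_cited:
            cited[platform] += 1
    return {platform: 100 * cited[platform] / tested[platform] for platform in tested}


# Rebuild the sample figures: 20 prompts per platform, with N of them cited.
sample_counts = {"ChatGPT": 8, "Claude": 6, "Google AI Overviews": 4, "Perplexity": 10}
sample_results = [
    (platform, index < cited_count)
    for platform, cited_count in sample_counts.items()
    for index in range(20)
]
rates = platform_rates(sample_results)
best_platform = max(rates, key=rates.get)
```

With these sample figures, `best_platform` is Perplexity at 50%.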

Content Performance

Identify which types of content get cited most often:

High-performing content:

- Comprehensive guides - "Complete Guide to Tenant Screening"

- Problem-solving posts - "How to Handle Difficult Tenants"

- Industry analysis - "2024 Property Investment Trends"

Low-performing content:

- Short tips - "5 Quick Property Management Tips"

- Generic advice - "Property Management Basics"

- Outdated content - Posts older than 6 months

Optimization Strategies

Improve Citation Rates

Use your evaluation data to optimize content for better AI visibility:

Content improvements:

- Expand thin content - Turn short posts into comprehensive guides

- Add specific examples - Include concrete details and case studies

- Update outdated posts - Refresh old content with current information

- Create missing content - Fill gaps identified in your testing

Platform-Specific Optimization

Tailor your content strategy based on platform performance:

For ChatGPT (general questions):

- Focus on comprehensive, beginner-friendly content

- Use clear, accessible language

- Include step-by-step instructions

For Claude (detailed analysis):

- Create in-depth comparisons and analysis

- Include data and statistics

- Provide nuanced perspectives on complex topics

For Perplexity (source citations):

- Ensure your content is well-sourced

- Include references and citations

- Make your expertise clearly evident

Competitive Analysis

Monitor how competitors perform in your prompt tests:

Competitive tracking:

- Brand mentions - How often competitors get cited

- Content gaps - Topics where competitors outperform you

- Opportunity identification - Areas where you can gain advantage
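Competitor mentions and content gaps can be tallied from the responses you have already recorded. This is a minimal sketch, assuming responses are stored as a prompt-to-text mapping and that a simple case-insensitive substring match is good enough; the brand names and the `competitive_summary` helper are hypothetical.

```python
def mentions(text, brand):
    """Case-insensitive substring check for a brand name."""
    return brand.lower() in text.lower()


def competitive_summary(responses, own_brand, competitors):
    """Count competitor mentions and list prompts where only competitors appear.

    `responses` maps each prompt to the AI response text recorded for it.
    """
    mention_counts = {name: 0 for name in competitors}
    content_gaps = []
    for prompt, text in responses.items():
        cited_competitors = [name for name in competitors if mentions(text, name)]
        for name in cited_competitors:
            mention_counts[name] += 1
        # A gap is a prompt where a competitor is cited but your brand is not.
        if cited_competitors and not mentions(text, own_brand):
            content_gaps.append(prompt)
    return mention_counts, content_gaps
```

Prompts returned in the gap list are candidates for new or expanded content.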

Waldium Analytics Dashboard

Use Waldium's built-in analytics to automate prompt evaluation:

Automatic tracking:

- Citation monitoring - Real-time tracking of AI mentions

- Performance alerts - Notifications when citation rates change

- Trend analysis - Historical data on citation performance

- Competitive monitoring - Track competitor mentions

Manual Testing Tools

For detailed evaluation, use these tools:

Testing spreadsheet:

- Prompt bank - Organized list of test questions

- Results tracking - Citation rates and response quality

- Performance metrics - Platform and content performance

- Trend analysis - Changes over time

Testing schedule:

- Daily - Test 2-3 new prompts

- Weekly - Review top-performing prompts

- Monthly - Full evaluation and optimization